good model

- Thread starter Artur

- Start date

There are three main criteria for evaluating the quality of a predictive model:

1. Are the predictions accurate? We are interested in the height of the lift curve. Lift is a measure of the performance of a targeting model (association rule) at predicting or classifying cases as having an enhanced response (with respect to the population as a whole), measured against a random choice targeting model. For example, suppose a population has an average response rate of 5%, but a certain model has identified a segment with a response rate of 20%. Then that segment would have a lift of 4.0 (20%/5%).
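As a rough illustration of the arithmetic above, here is a minimal Python sketch. The function names `lift` and `lift_at_top` are made up for this post and do not come from any particular tool; the second function assumes you have one model score and one 0/1 target per case.

```python
def lift(segment_response_rate, baseline_response_rate):
    """Lift of a segment relative to a random selection of the population."""
    if baseline_response_rate <= 0:
        raise ValueError("baseline response rate must be positive")
    return segment_response_rate / baseline_response_rate


def lift_at_top(scores, targets, fraction):
    """Lift of the top `fraction` of cases, ranked by descending model score."""
    ranked = sorted(zip(scores, targets), key=lambda pair: pair[0], reverse=True)
    n_top = max(1, round(len(ranked) * fraction))
    top_rate = sum(target for _, target in ranked[:n_top]) / n_top
    base_rate = sum(targets) / len(targets)
    return lift(top_rate, base_rate)


# The example from the text: a 20% segment against a 5% population average.
example_lift = lift(0.20, 0.05)
```

Computing `lift_at_top` for increasing fractions (10%, 20%, ...) gives the points of a lift curve.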

2. What "insights" did you gain from the model, and do they make sense?

When creating new models, a large amount of time is always invested in validating the variables used inside the model. Checking variables is important because, if you don’t do it, you might end up with a model that seems to predict very well but, once in production, performs no better than a random selection. It’s thus very important to have an easily comprehensible model with only a few variables to check.

Let’s take a look at the following example:

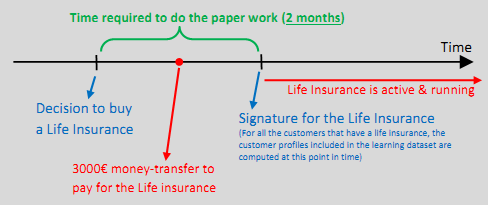

A large bank is selling a “Life Insurance” product. The objective of the predictive model is to detect the people interested in the “Life Insurance” product. To create the model, a student used a learning dataset containing:

• For the people that have a “Life Insurance” contract: the profile of the customers just before they signed the “Life Insurance” contract (and the target is “1”).

• For the people that don’t have a “Life Insurance” contract: the latest update of the profile of the customers (and the target is “0”).

The student obtained a good predictive model (lift above 7). The model used several variables, but one of them caught our attention: “Is there recently an outgoing money transfer for a value of 3000$?”. If this variable is “true”, then the probability of buying a “Life Insurance” product increases substantially.

To understand why this variable appears inside our predictive model, let’s have a look at this chart:

If you pay attention to the above chart, you’ll quickly notice that there is a problem with the modeling methodology: the student is using a customer profile (inside the learning dataset) that is taken at the exact time of the signature of the “Life Insurance” contract.

Obviously, this profile is erroneous: the variable “Is there recently an outgoing money transfer for a value of 3000€?” will generally be “true” for the people who bought a “Life Insurance” contract, because the transfer is a consequence of the purchase, not a sign of interest that exists before it.

Once the error in the modeling methodology has been detected, the correction is simple: change the observation date.

Unfortunately, spotting such an error is difficult if you don’t have the right tool, for example when your tool creates very complex models including hundreds of variables.

If you deploy this model in production, you’ll end up with a marketing campaign very close to a “random selection”.
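To make the observation-date problem concrete, here is a small Python sketch. Everything in it is an assumption made for illustration: the 30-day window used for "recently", the 60-day gap before the signature, the "at least 3000" test, and the sample dates. It is not the student's actual code and not how any specific tool builds its dataset.

```python
from datetime import date, timedelta


def has_recent_large_transfer(transfers, observation_date, amount=3000, window_days=30):
    """True if an outgoing transfer of at least `amount` was made in the
    `window_days` days up to and including `observation_date`."""
    window_start = observation_date - timedelta(days=window_days)
    return any(
        window_start < when <= observation_date and value >= amount
        for when, value in transfers
    )


def shifted_observation_date(signature_date, gap_days=60):
    """Observe the profile well before the signature, so that nothing
    caused by the purchase can leak into the variables."""
    return signature_date - timedelta(days=gap_days)


# Hypothetical customer: pays 3000 on the day the contract is signed.
signature_date = date(2020, 6, 15)
transfers = [(date(2020, 3, 2), 150), (date(2020, 6, 15), 3000)]

# Profile taken at the signature: the variable "sees" the purchase itself.
leaky_value = has_recent_large_transfer(transfers, signature_date)

# Profile taken 60 days earlier: the variable only sees prior behaviour.
clean_value = has_recent_large_transfer(
    transfers, shifted_observation_date(signature_date)
)
```

With the profile taken at the signature the variable is true; with the earlier observation date it is false, which is also what the model will see in production when it scores people who have not bought anything yet.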

3. Over-fitting: an over-fitted model describes random error or noise instead of the underlying relationship. Over-fitting occurs when a model is excessively complex, such as having too many parameters relative to the number of observations. A model that has been over-fitted has poor predictive performance, as it overreacts to minor fluctuations in the training data.

TIMi controls over-fitting automatically: it spends 90% of its computation time running an algorithm to control over-fitting. There is usually no over-fitting in a TIMi model. When the exception occurs, the user can change a parameter in the TIMi interface (the "min-bin-size" parameter) to remove the over-fitting.

Since over-fitting is important, each time TIMi delivers a new predictive model, it also generates a lift chart that lets you see directly and precisely whether there is any over-fitting.
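If you want to run a similar check by hand, one common approach is to compare the lift on the learning dataset with the lift on a held-out test dataset that the model never saw. The Python sketch below is a generic illustration and not TIMi's algorithm; the function names, the 20% fraction, and the 0.8 tolerance are arbitrary assumptions.

```python
def top_fraction_lift(scores, targets, fraction=0.2):
    """Lift of the top `fraction` of cases, ranked by descending score.
    Assumes `targets` contains at least one positive case."""
    ranked = sorted(zip(scores, targets), key=lambda pair: pair[0], reverse=True)
    n_top = max(1, round(len(ranked) * fraction))
    top_rate = sum(target for _, target in ranked[:n_top]) / n_top
    base_rate = sum(targets) / len(targets)
    return top_rate / base_rate


def looks_overfitted(train, test, fraction=0.2, tolerance=0.8):
    """Flag the model when the test lift falls below `tolerance` times
    the training lift. `train` and `test` are (scores, targets) pairs."""
    train_lift = top_fraction_lift(train[0], train[1], fraction)
    test_lift = top_fraction_lift(test[0], test[1], fraction)
    return test_lift < tolerance * train_lift, train_lift, test_lift


# Toy data: the same ten scores, but the targets differ between the sets.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.0]
train = (scores, [1, 1, 0, 0, 0, 0, 0, 0, 0, 0])
test = (scores, [0, 1, 0, 0, 1, 0, 0, 0, 0, 0])

flag, train_lift, test_lift = looks_overfitted(train, test)
```

A large gap between the two lifts suggests the model has learned noise from the learning dataset; two lift curves that stay close together suggest it has not.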
